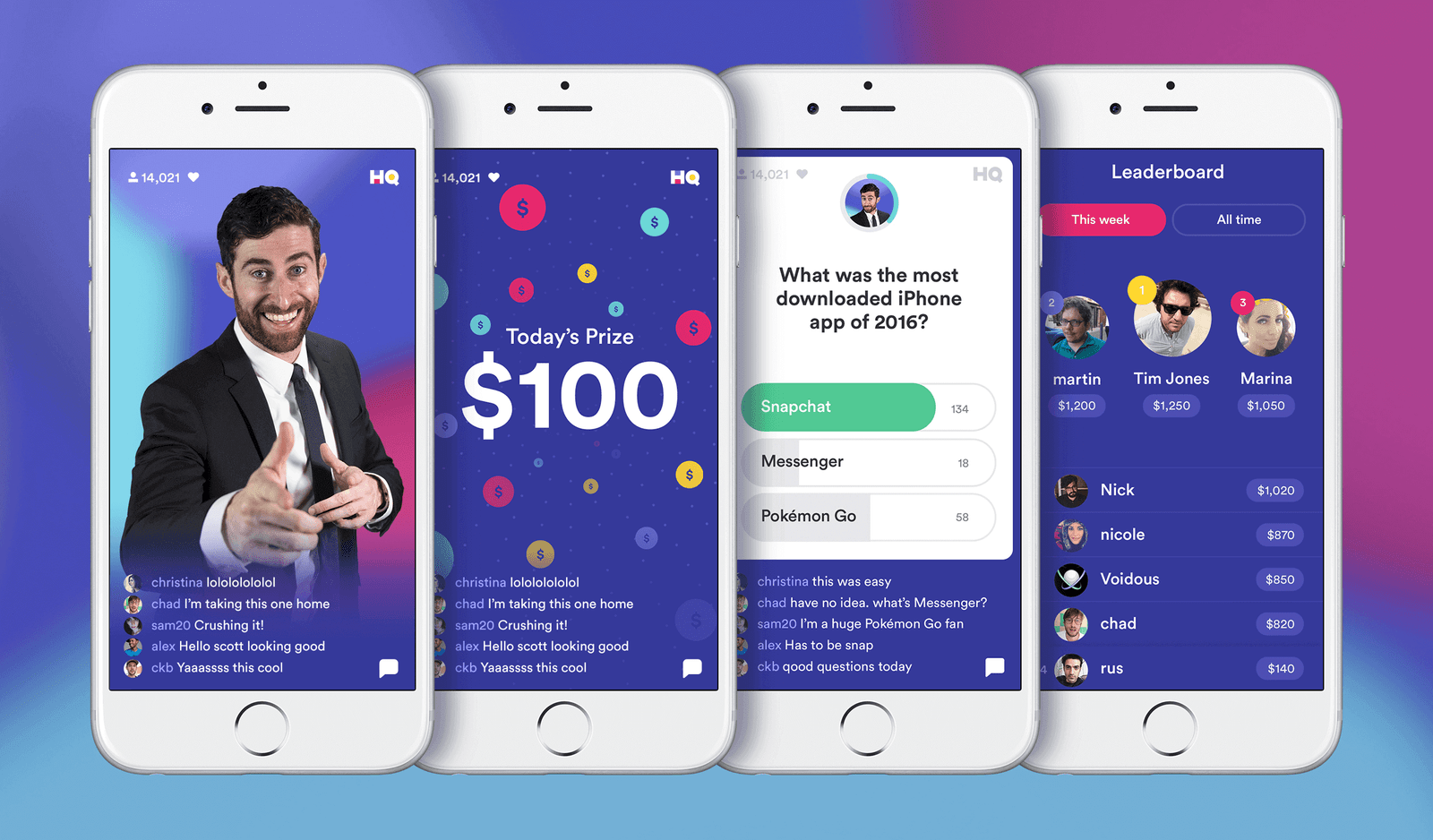

Does anyone remember the fall of 2017? That’s when HQ Trivia took the world by storm. This live mobile trivia game initially featured 10 questions and ran only twice a day, attracting millions of eager players vying for cash prizes. My friends and I were no exception, gathering together in a Discord channel every day to help each other progress through the game. Most of the time, we were knocked out by obscure or tricky questions early on, never making it past the 6th or 7th round.

During one of our discussions, we shared tips and tricks for answering questions correctly. One friend suggested designating a person to Google the answers, but they could never type fast enough before the timer ran out. Another mentioned using Siri, but Siri's transcription errors proved costly. That's when I had an idea: could we automate the entire process?

Before diving in, let me clarify that I don't condone cheating. This was purely a learning exercise. I set out to create a Python script that could leverage the power of Google search for HQ Trivia. At the time, the Google Web Search API had been deprecated, and what remained available was severely limited. It lacked the intelligent search capabilities my scoring process relied on to find the right answer (more on this below). The solution was to use Selenium to drive a real browser and run the searches on google.com directly.

Building the Python Script

The plan was to mirror my device onto my desktop, take a screenshot of the game screen (saved directly to my computer), and run the Python script. I combined the screenshot and the script into a single step with a macro bound to a hotkey.

The script performed the following tasks:

- Read in the newest screenshot from disk

- Apply OCR to convert the image to text using pytesseract

- Run a constrained Google search using search operators and Selenium

- Parse results and identify the answer choice that appears most/least often

- Recommend the most likely answer
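The first two steps can be sketched in Python roughly as follows. This is a minimal sketch, not the code from the repo: it assumes screenshots are saved as PNG files in a single folder, and that the OCR text contains the question (ending with '?') followed by the three answer choices on their own lines. A real screenshot also contains other on-screen text, so cropping to the question area may be needed first. The helper names `newest_screenshot`, `ocr_screenshot` and `parse_question` are hypothetical; `pytesseract.image_to_string` is the real pytesseract call.

```python
import glob
import os


def newest_screenshot(folder):
    """Return the path of the most recently modified PNG in `folder`, or None."""
    paths = glob.glob(os.path.join(folder, "*.png"))
    return max(paths, key=os.path.getmtime) if paths else None


def ocr_screenshot(path):
    """Run Tesseract OCR on a screenshot.

    Needs Pillow, pytesseract and the tesseract binary; they are imported
    here so the parsing helper below can be used without them.
    """
    from PIL import Image
    import pytesseract

    return pytesseract.image_to_string(Image.open(path))


def parse_question(ocr_text):
    """Split OCR output into (question, answers).

    Hypothetical layout assumption: the question ends with '?' (possibly
    wrapped over several lines) and the answer choices follow, one per line.
    """
    lines = [line.strip() for line in ocr_text.splitlines() if line.strip()]
    # Index of the line that ends the question
    q_end = next((i for i, line in enumerate(lines) if line.endswith("?")), None)
    if q_end is None:
        raise ValueError("no question mark found in OCR text")
    question = " ".join(lines[: q_end + 1])  # re-join a wrapped question
    answers = lines[q_end + 1 : q_end + 4]   # the next three lines
    return question, answers
```

For example, OCR text with the question wrapped over two lines, followed by "Busking", "Straw-capping" and "Panhandling", yields the joined question plus a three-item answer list, which is the `hq_question`/`hq_answers` shape the search step works with.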

I won't get into how to use Selenium for automation or Tesseract for OCR, since those deserve their own blog posts. Below, I explain how I performed the search and then scored the results to predict the correct answer, which worked about 80% of the time. The GitHub repo is here.

Predicting the Correct Answer

Most people were simply typing the question into the search bar, which works fine for human eyes. It's harder for a computer, though, because the correct answer isn't always in the first result. To work around this, I used Google search operators to constrain the search so that results also had to include at least one of the three possible answers. This approach relies on Google's site relevance and quality ranking, which tends to push more mentions of the correct answer onto the first page. I also tried this with Bing, and on the same set of questions it was 25% less accurate at predicting the correct answer.

# Query using google, exclude the '?' char in the question

query_str = '(' + hq_question[:-1] + ') AND (' + ' OR '.join(hq_answers) + ')'

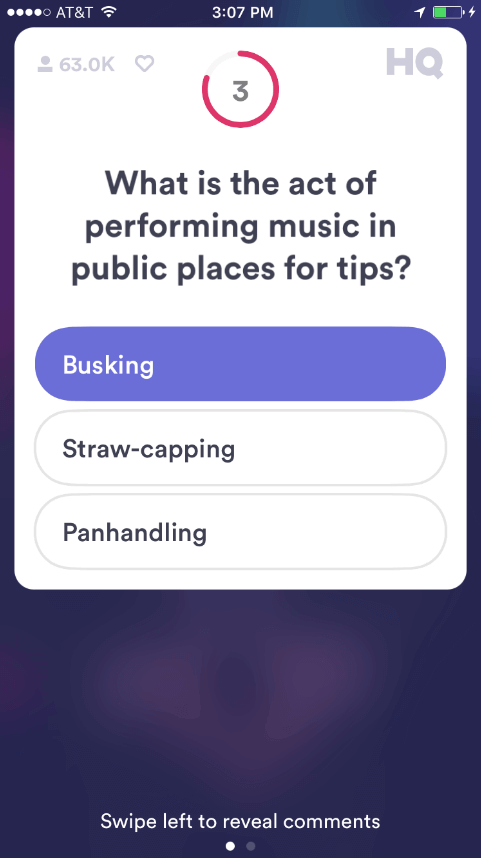

browser.get('https://www.google.com/search?q=' + query_str)The query string for this question would be:

```
(What is the act of performing music in public places for tips) AND (Busking OR Straw-capping OR Panhandling)
```

Next, I analyzed all the results on the first page, noting which of the three possible answers each result mentioned. I gave results from Wikipedia a 2x weight and penalized results that mentioned more than one answer, since only one answer can be correct. For example, a result that mentioned two answers had its weight halved, while one that mentioned all three had its weight cut to a third.
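Before any scoring, the text of each result has to be pulled off the loaded page as a list of strings (`search_results` in the scoring code). One possible sketch is below. The function name `collect_result_texts` is hypothetical, and the `div.g` CSS selector is an assumption: Google's result markup changes often, so the selector would need to be checked against the live page.

```python
def collect_result_texts(driver, css_selector="div.g"):
    """Return the visible text of each search result on the current page.

    `driver` is a Selenium WebDriver that has already loaded a Google
    results page. The default selector is an assumption and may need
    updating whenever Google changes its markup.
    """
    # "css selector" is the string value of selenium's By.CSS_SELECTOR
    elements = driver.find_elements("css selector", css_selector)
    # Skip empty containers so every entry has text to search
    return [el.text for el in elements if el.text.strip()]
```

Passing the locator strategy as a plain string avoids importing `By`, but `driver.find_elements(By.CSS_SELECTOR, css_selector)` is equivalent.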

```python
import re

import numpy as np

# search_results: list of strings, the text of each result on the first page
# hq_answers: list of the three answer choices from the OCR step

# Create a 2D array to store the instance count of each answer in each search result
results = np.zeros([len(search_results), len(hq_answers)])

for i, result in enumerate(search_results):
    for j, answer in enumerate(hq_answers):
        # First, pick the longest word in the answer to use for substring match
        # (relevant for multi-word answers)
        longest_word = max(answer.split(), key=len)

        # Quick-n-dirty way to remove plural suffixes...
        if longest_word.endswith('ies'):
            ans = longest_word[:-3]
        elif longest_word.endswith('s'):
            ans = longest_word[:-1]
        else:
            ans = longest_word

        # Find any instance of the answer in each returned search result
        # (case insensitive; re.escape guards against regex metacharacters)
        results[i][j] = bool(re.search(r'\b' + re.escape(ans), result, re.IGNORECASE))

        # Weigh wiki results more
        if 'Wikipedia' in result:
            results[i][j] *= 2

# Down-weigh search results that contain more than one answer option...
# This is because there can only be one correct answer!
counts = (results > 0).sum(axis=1)[:, None]
# Results that mention no answer get 0 instead of NaN (0/0)
R = np.divide(results, counts, out=np.zeros_like(results), where=counts > 0)
```

Finally, I squared the weights before summing them for each answer choice. Squaring amplifies the effect of the weights, so a single strong, unambiguous hit (a Wikipedia result worth 2 becomes 4) counts for more than several diluted ones (two halves become 0.25 + 0.25). I then recommended the answer with the highest score. However, if the question was an inversion type (e.g., "Which among the following is NOT a fruit? Apple, Orange, Fish"), the answer with the lowest score was selected instead.

```python
# Sum of squared weights for each answer (same as np.dot(R.T, R).diagonal())
scores = (R ** 2).sum(axis=0)

if R.sum() == 0:
    print('dunno')
elif ' NOT ' in hq_question or 'except' in hq_question:
    # If this is an inversion-type question, then we want the minimum
    print(hq_answers[scores.argmin()])  # lowest score
else:
    print(hq_answers[scores.argmax()])  # highest score
```

And that's it!
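To make the arithmetic concrete, here is a self-contained sketch that runs the same weighting and scoring on a small, hand-made mention matrix instead of live search results. The function names `score_answers` and `pick_answer` and the toy data are illustrative, not taken from the repo, and the sketch assumes mentions have already been detected (1 if a result mentions an answer, 0 otherwise).

```python
import numpy as np


def score_answers(mentions, is_wiki):
    """Return one score per answer from a 0/1 mention matrix.

    mentions: shape (n_results, n_answers), 1 where a result mentions an answer
    is_wiki:  boolean array of shape (n_results,), True for Wikipedia results
    """
    weights = mentions.astype(float)
    weights[is_wiki] *= 2  # Wikipedia results count double
    # Split each result's weight across the answers it mentions
    counts = (weights > 0).sum(axis=1, keepdims=True)
    R = np.divide(weights, counts, out=np.zeros_like(weights), where=counts > 0)
    # Sum of squared weights per answer
    return (R ** 2).sum(axis=0)


def pick_answer(question, answers, scores):
    """Highest score wins, lowest for NOT/except questions; None if no evidence."""
    if scores.sum() == 0:
        return None
    if " NOT " in question or "except" in question:
        return answers[scores.argmin()]
    return answers[scores.argmax()]


answers = ["Busking", "Straw-capping", "Panhandling"]
mentions = np.array([
    [1, 0, 0],  # a Wikipedia result that mentions only Busking
    [1, 0, 1],  # mentions two answers, so each gets weight 1/2
    [0, 0, 1],
    [0, 0, 0],  # mentions no answer, contributes nothing
    [1, 1, 1],  # mentions all three, so each gets weight 1/3
])
is_wiki = np.array([True, False, False, False, False])

scores = score_answers(mentions, is_wiki)
print(scores)  # roughly [4.361, 0.111, 1.361]
print(pick_answer("What is the act of performing music in public places for tips?", answers, scores))  # Busking
```

With these toy numbers the Wikipedia hit dominates: squaring turns its weight of 2 into 4, while the two-answer and three-answer results contribute only 1/4 and 1/9 per answer. For a NOT question, the same scores would select Straw-capping instead.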

Did It Work?

As of April 25, 2018, it worked well after the second or third question, providing the correct answer about 80% of the time. Not bad for something as simple as counting search hits. Interestingly, the first 2-3 questions were often the easiest for humans but proved tricky for my little algorithm. Those early questions seemed specifically designed to weed out bots playing the game… and probably also people who tend to overthink. (How many stop signs are there really in the Google captchas??)

Concluding Thoughts

What about training a machine learning model to find the answer among the search results? It's a good idea, and perhaps someone has done that by now. At the time, however, HQ Trivia was still new to the scene and ran only two games per day, so the training set would have been too small. Nowadays, ChatGPT would be the tool to use, but things were very different back in early 2018. The field has certainly advanced greatly since then!

Of course, as it turns out, others were experimenting with similar ideas and even took it a step further by creating bots to cheat in HQ Trivia. I never used my code for cheating; from the very beginning, my project was just a proof of concept and an opportunity to explore Selenium. Please also note that scraping Google's search results by automated means was once against Google's Terms of Service, and it might still be.

Looking back, HQ Trivia was a fun, social experience that brought friends together, while also sparking my curiosity in coding for automation. It’s a reminder that we can learn a lot from our hobbies and find ways to combine our passions with personal growth.